Introduction

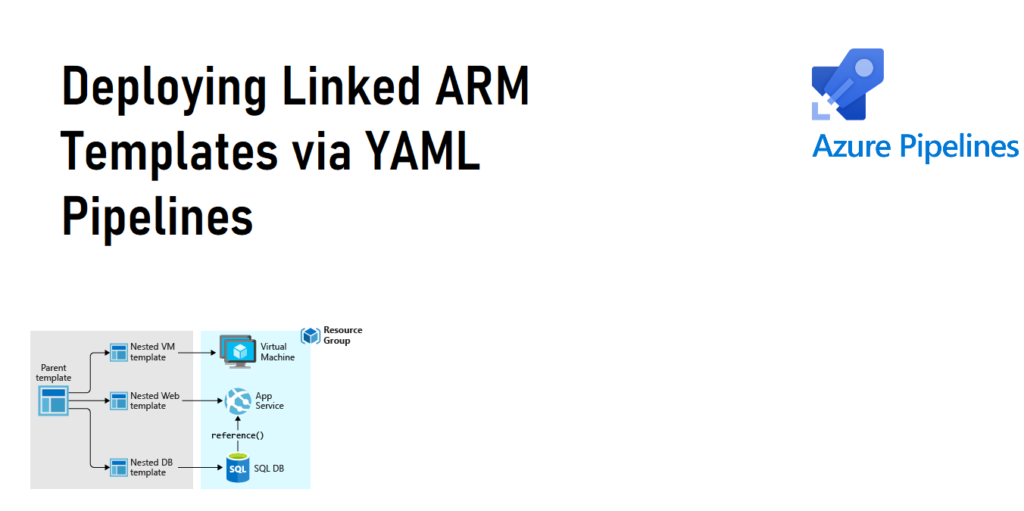

This was a fun one. For those unaware, a linked ARM template is, according to the Microsoft documentation:

To deploy complex solutions, you can break your Azure Resource Manager template (ARM template) into many related templates, and then deploy them together through a main template. The related templates can be separate files or template syntax that is embedded within the main template.

learn.microsoft.com

This is something I traditionally do not recommend and advise avoiding, as it is usually an indication that your ARM template has become too bloated and cumbersome to manage. However, my recent experience creating YAML templates for Data Factory, where you cannot control the size of the template, forced me to come up with an approach for tackling this deployment type. This post will walk you through deploying linked ARM templates via YAML Pipelines.

How are Linked Templates Different?

Before diving in and developing an approach around linked ARM templates, we should first evaluate the differences we need to consider when using linked templates instead of traditional single-file ARM templates.

The first place that made sense, to me, was to evaluate the AzureResourceManagerTemplateDeployment@3 task. This is a task I’ve used before to deploy single template files, so it made sense to evaluate what inputs are required to perform a linked template deployment.

# ARM template deployment v3
# Deploy an Azure Resource Manager (ARM) template to all the deployment scopes.
- task: AzureResourceManagerTemplateDeployment@3
  inputs:
    # Azure Details
    deploymentScope: 'Resource Group' # 'Management Group' | 'Subscription' | 'Resource Group'. Required. Deployment scope. Default: Resource Group.
    azureResourceManagerConnection: # string. Alias: ConnectedServiceName. Required. Azure Resource Manager connection.
    #subscriptionId: # string. Alias: subscriptionName. Required when deploymentScope != Management Group. Subscription.
    #action: 'Create Or Update Resource Group' # 'Create Or Update Resource Group' | 'DeleteRG'. Required when deploymentScope = Resource Group. Action. Default: Create Or Update Resource Group.
    #resourceGroupName: # string. Required when deploymentScope = Resource Group. Resource group.
    #location: # string. Required when action = Create Or Update Resource Group || deploymentScope != Resource Group. Location.
    # Template
    templateLocation: 'Linked artifact' # 'Linked artifact' | 'URL of the file'. Required. Template location. Default: Linked artifact.
    #csmFileLink: # string. Required when templateLocation = URL of the file. Template link.
    #csmParametersFileLink: # string. Optional. Use when templateLocation = URL of the file. Template parameters link.
    #csmFile: # string. Required when templateLocation = Linked artifact. Template.
    #csmParametersFile: # string. Optional. Use when templateLocation = Linked artifact. Template parameters.
    #overrideParameters: # string. Override template parameters.
    deploymentMode: 'Incremental' # 'Incremental' | 'Complete' | 'Validation'. Required. Deployment mode. Default: Incremental.
    # Advanced
    #deploymentName: # string. Deployment name.
    #deploymentOutputs: # string. Deployment outputs.
    #addSpnToEnvironment: false # boolean. Access service principal details in override parameters. Default: false.

A quick glance at this told me that I would require a csmFileLink and a csmParametersFileLink for this task to run successfully.

Reviewing the details of this argument gave me another clue as to what will be required:

This not only tells me the template files will need to be stored in an Azure Storage Account; it also tells me how to authenticate to the Azure Storage Account: a SAS token. This is not my first choice in technologies; however, I quickly worked out a framework of steps for handling this situation in a secure manner.

The Steps

Now that the requirements, an Azure Storage Account and a SAS token, are known, let’s frame out the steps with the following considerations:

- Secure

- Idempotent

- Self-purging

With these considerations in mind, let’s lay out the logical steps and relate them to technical execution.

- Create a Storage Account and Container

- Move ARM templates to the Container

- Create a SAS token with a short expiration (one day, to balance simplicity and security)

- Deploy the ARM template by passing the template URIs, with the SAS token appended, to the deployment task

- Delete the Storage Account

Writing the Tasks

Listing the steps is easy; writing the technical execution can be a challenge. To help translate, here is my proposed solution, and I will provide the code as we walk through each step in detail. To keep each step idempotent, each task will execute exactly one step in the process. This means there will be more tasks here than steps outlined above:

- Azure CLI to create a storage account

- Azure CLI to create the container

- Azure CLI to create an expiration date and store as a Pipeline Variable

- Azure CLI to create a SAS Token and store as a Pipeline Variable

- Azure CLI to copy the pipeline artifacts to the storage account container

- Azure Resource Manager Template Deployment for Linked ARM templates

- Azure CLI to delete the Storage Account

The intent is that each task is responsible for one piece of the deployment. This ensures an all-or-nothing approach. We want to designate and use the storage account for its intended purpose and lifecycle; in this case it will exist only for the duration of the deployment. I am going to walk through each step; however, as a prerequisite, one should be familiar with my YAML pipeline task template for executing CLI commands:

parameters:
- name: azureSubscriptionName
  type: string
  default: ''
- name: inlineScript
  type: string
  default: ''
- name: displayName
  type: string
  default: ''
steps:
- task: AzureCLI@2
  displayName: ${{ parameters.displayName }}
  inputs:
    azureSubscription: ${{ parameters.azureSubscriptionName }}
    scriptType: 'pscore'
    scriptLocation: 'inlineScript'
    inlineScript: '${{ parameters.inlineScript }} '

This template will be reused for all the CLI tasks. The rationale behind using it is that we can control the task version and maintenance in one place for every instantiated instance of the task.

Azure CLI to create a storage account

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Create Storage Account for Linked Templates'
    inlineScript: 'az storage account create --name ${{ variables.linkedServiceStorageAccountName }} --resource-group ${{ variables.resourceGroupName }}'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}

Pretty straightforward. The variables here are being read in from a variable template file scoped at the job level. If you are unfamiliar with this, check out my Microsoft Health and Life Sciences blog on this topic. The account name includes the Azure DevOps BuildId to ensure uniqueness.
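Because the account name is assembled from several variables plus the BuildId, it can be worth checking it before calling Azure. Storage account names must be 3 to 24 characters long and contain only lowercase letters and numbers. The step below is a minimal sketch of such a guard, not part of the original pipeline; the step itself and its display name are assumptions, and it assumes linkedServiceStorageAccountName is defined as a job-level variable as shown later in this post.

```yaml
# Sketch only: fail fast if the generated storage account name is invalid.
# This step is a hypothetical addition and is not in the original job.
- task: PowerShell@2
  displayName: 'Validate Storage Account Name'
  inputs:
    targetType: 'inline'
    pwsh: true
    script: |
      $name = '$(linkedServiceStorageAccountName)'
      # Storage account names: 3-24 characters, lowercase letters and digits only.
      if ($name -cnotmatch '^[a-z0-9]{3,24}$') {
        Write-Host "##vso[task.logissue type=error]Storage account name '$name' must be 3-24 lowercase letters or digits."
        exit 1
      }
```

Placing a check like this ahead of the create step surfaces a naming problem (for example, a long environment or region abbreviation pushing the name past 24 characters) with a clear message instead of a CLI error.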

Azure CLI to create the container

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Create Container for Linked Templates'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
    inlineScript: "az storage container create --account-name ${{ variables.linkedServiceStorageAccountName }} --name ${{ variables.linkedServiceStorageAccountContainerName }} "

Again, nothing overly complicated, just some simple commands. In this case the variables are being set at the job level.

Azure CLI to create an expiration date

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Get SAS Expiration Date'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
    inlineScript: |
      $date = (Get-Date).AddDays(1)
      $formattedDate = $date.ToString("yyyy-MM-dd")
      echo "##vso[task.setvariable variable=sasExpirationDate;]$formattedDate"

Note that we are also writing the expiration date to an ADO variable, sasExpirationDate. More on this later, as it is something I learned when trying to pass in the SAS token. If writing an Azure DevOps variable via a PowerShell script is new to you, here is more information on it.
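If a full day feels too generous, the same step can write a complete UTC timestamp instead of a date. The sketch below is a variation rather than the author's step: the two-hour window and the display name are assumptions, and the timestamp follows the Y-m-d'T'H:M'Z' form that the Azure CLI documents for the --expiry argument.

```yaml
# Sketch only: a shorter-lived expiry written as a full UTC timestamp.
# The two-hour window is an arbitrary assumption; size it to your deployment.
- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Get SAS Expiration Timestamp'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
    inlineScript: |
      # Convert to UTC first, then emit the literal T and Z around the time.
      $expiry = (Get-Date).ToUniversalTime().AddHours(2).ToString("yyyy-MM-dd'T'HH:mm'Z'")
      echo "##vso[task.setvariable variable=sasExpirationDate;]$expiry"
```

The variable name stays sasExpirationDate, so the step that generates the SAS token would not need to change.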

Azure CLI to create a SAS Token

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Get SAS Token for Storage Account'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
    inlineScript: |
      $token = az storage container generate-sas --account-name ${{ variables.linkedServiceStorageAccountName }} --name ${{ variables.linkedServiceStorageAccountContainerName }} --permissions r --expiry $(sasExpirationDate) --output tsv
      echo "##vso[task.setvariable variable=sasToken;issecret=true]?$token"

This code uses the expiration date from the previous step to create the SAS token. Keep in mind that the token is a secret, so we tell Azure DevOps that the variable is a secret. The leading ? is prepended so the token can be appended directly to a blob URL as a query string. Now here is a fun bit of information I learned in this process: the az storage container generate-sas command does not require an expiration. Originally I intended to exclude an expiration date, just to make things easier. However, I learned that the SAS token value passed to the AzureResourceManagerTemplateDeployment task REQUIRES the se property, which I learned is the expiration value.

Azure CLI to copy the pipeline artifacts to the storage account container

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Copy Linked Templates to Azure Storage'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
    inlineScript: az storage blob upload-batch --account-name ${{ variables.linkedServiceStorageAccountName }} --destination ${{ variables.linkedServiceStorageAccountContainerName }} --source ${{ parameters.workingDirectory }}${{ parameters.artifactName }}

This snippet copies the pipeline artifact to the container we created in the storage account. I am not using the SAS token here, as role-based access control (RBAC) is the preferred authentication method over a SAS token. Our Azure DevOps service principal has Contributor access on the subscription. As such, it has inherited the ability to copy files to the storage account.

Azure Resource Manager Template Deployment for Linked ARM Templates

- template: ../tasks/ado_ARM_linked_template_deployment_task.yml
  parameters:
    azureResourceManagerConnection: ${{ variables.azureServiceConnectionName }}
    resourceGroupName: ${{ variables.resourceGroupName }}
    location: ${{ parameters.location }}
    csmFileLink: '${{ variables.linkedServiceStorageAccountURL }}/${{ parameters.templateFile }}$(sasToken)'
    csmParametersFileLink: '${{ variables.linkedServiceStorageAccountURL }}/parameters/${{ parameters.environmentName }}.${{ parameters.regionAbrv }}.${{ parameters.templateParametersFile }}.json$(sasToken)'
    overrideParameters: '${{ parameters.overrideParameters }} -containerUri ${{ variables.linkedServiceStorageAccountURL }}/linkedTemplates -containerSasToken $(sasToken)'

All of the prework we have done was to get us to this point: deploying a linked ARM template. We have created our storage account, container, and SAS token, and uploaded all the necessary files. Now we can pass in all the information. In this case, linkedTemplates is a folder in the container that holds the master template, and the parameters folder holds the environment-specific ARM parameter files.

From what I have gathered, a SAS token is required because Azure Resource Manager handles the deployment internally rather than running as a named service principal and/or user.
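The task template referenced above, ado_ARM_linked_template_deployment_task.yml, is not shown in this post. The following is a hypothetical sketch of what such a wrapper could look like, built only from the parameters passed above and the task inputs listed earlier; the parameter defaults, the display name, and the deploymentMode parameter are assumptions rather than the contents of the actual file.

```yaml
# Hypothetical sketch of ../tasks/ado_ARM_linked_template_deployment_task.yml.
# The real file may differ; names mirror the parameters passed in the step above.
parameters:
- name: azureResourceManagerConnection
  type: string
- name: resourceGroupName
  type: string
- name: location
  type: string
- name: csmFileLink
  type: string
- name: csmParametersFileLink
  type: string
- name: overrideParameters
  type: string
  default: ''
- name: deploymentMode # assumed extra parameter, defaulting to the task default
  type: string
  default: 'Incremental'

steps:
- task: AzureResourceManagerTemplateDeployment@3
  displayName: 'Deploy Linked ARM Templates'
  inputs:
    deploymentScope: 'Resource Group'
    azureResourceManagerConnection: ${{ parameters.azureResourceManagerConnection }}
    # subscriptionId is listed as required for this scope in the task reference;
    # supply it here if your setup needs it.
    action: 'Create Or Update Resource Group'
    resourceGroupName: ${{ parameters.resourceGroupName }}
    location: ${{ parameters.location }}
    # 'URL of the file' is what switches the task to csmFileLink / csmParametersFileLink.
    templateLocation: 'URL of the file'
    csmFileLink: ${{ parameters.csmFileLink }}
    csmParametersFileLink: ${{ parameters.csmParametersFileLink }}
    overrideParameters: ${{ parameters.overrideParameters }}
    deploymentMode: ${{ parameters.deploymentMode }}
```

The important design point is templateLocation: with 'URL of the file', the task reads the template and parameter files from the URLs, which is why both links carry the SAS token as a query string.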

Azure CLI to Delete the Storage Account

- template: ../tasks/azcli_inline_script_task.yml
  parameters:
    displayName: 'Delete Storage Account'
    inlineScript: 'az storage account delete --name ${{ variables.storageAccountAbrv }}${{ variables.linkedServiceStorageAccount }}${{ parameters.environmentName }}${{ parameters.regionAbrv }} --resource-group ${{ variables.resourceGroupName }} --yes'
    azureSubscriptionName: ${{ variables.azureServiceConnectionName }}

Lastly, I want the pipeline to clean up after itself and remove the storage account. The deployment architecture does not require the templates to live past the deployment. Leaving them out there creates a security loophole, as anyone who has access to the storage account technically has access to a copy of the source code, since we copied it up there directly. Additionally, though small, the storage account will incur additional cost. And could you imagine the portal experience if we had a storage account for every Azure deployment we made?
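One gap worth considering: by default a step only runs when the previous steps succeeded, so a failed deployment would skip the delete and leave the storage account behind. A condition cannot be placed on a template reference, so the sketch below writes the delete as a direct AzureCLI@2 task with condition: always(). This is a suggested variation and not the author's step; it uses the linkedServiceStorageAccountName variable, which the job defines as the same concatenation used above.

```yaml
# Sketch only: the delete step written as a direct task so it can carry a condition.
- task: AzureCLI@2
  displayName: 'Delete Storage Account'
  condition: always() # run even when an earlier step, such as the deployment, fails
  inputs:
    azureSubscription: ${{ variables.azureServiceConnectionName }}
    scriptType: 'pscore'
    scriptLocation: 'inlineScript'
    inlineScript: 'az storage account delete --name ${{ variables.linkedServiceStorageAccountName }} --resource-group ${{ variables.resourceGroupName }} --yes'
```

An alternative that keeps the shared task template would be to add a condition parameter to it and pass the value through; either way the goal is the same self-purging behavior on both success and failure.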

ARM Deployment Job

parameters:
- name: environmentName
  type: string
- name: serviceName
  type: string
- name: regionAbrv
  type: string
- name: location
  type: string
  default: 'eastus'
- name: templateFile
  type: string
- name: templateParametersFile
  type: string
- name: overrideParameters
  type: string
  default: ''
- name: artifactName
  type: string
  default: 'ADFTemplates'
- name: workingDirectory
  type: string
  default: '../'

jobs:
- deployment: '${{ parameters.serviceName }}_infrastructure_lt_${{ parameters.environmentName }}_${{ parameters.regionAbrv }}'
  environment: ${{ parameters.environmentName }}
  variables:
  - template: ../variables/azure_global_variables.yml
  - template: ../variables/azure_${{parameters.environmentName}}_variables.yml
  - name: deploymentName
    value: '${{ parameters.serviceName }}_infrastructure_${{ parameters.environmentName }}_${{ parameters.regionAbrv }}'
  - name: resourceGroupName
    value: '${{ variables.resourceGroupAbrv }}-${{ parameters.serviceName }}-${{ parameters.environmentName }}-${{ parameters.regionAbrv }}'
  - name: ARMTemplatePath
    value: '${{ parameters.artifactName }}/${{ parameters.templateFile }}'
  - name: linkedServiceStorageAccount
    value: 'lt$(Build.BuildId)'
  - name: linkedServiceStorageAccountName
    value: ${{ variables.storageAccountAbrv }}${{ variables.linkedServiceStorageAccount }}${{ parameters.environmentName }}${{ parameters.regionAbrv }}
  - name: linkedServiceStorageAccountContainerName
    value: 'templates'
  - name: linkedServiceStorageAccountURL
    value: 'https://${{ variables.linkedServiceStorageAccountName }}.blob.core.windows.net/${{ variables.linkedServiceStorageAccountContainerName }}'
  strategy:
    runOnce:
      deploy:
        steps:
        - template: ../tasks/azcli_inline_script_task.yml
          parameters:
            displayName: 'Create Storage Account for Linked Templates'
            inlineScript: 'az storage account create --name ${{ variables.linkedServiceStorageAccountName }} --resource-group ${{ variables.resourceGroupName }}'
            azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
        - template: ../tasks/azcli_inline_script_task.yml
          parameters:
            displayName: 'Create Container for Linked Templates'
            azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
            inlineScript: "az storage container create --account-name ${{ variables.linkedServiceStorageAccountName }} --name ${{ variables.linkedServiceStorageAccountContainerName }} "
        - template: ../tasks/azcli_inline_script_task.yml
          parameters:
            displayName: 'Get SAS Expiration Date'
            azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
            inlineScript: |
              $date= $(Get-Date).AddDays(1)
              $formattedDate = $date.ToString("yyyy-MM-dd")
              echo "##vso[task.setvariable variable=sasExpirationDate;]$formattedDate"
        - template: ../tasks/azcli_inline_script_task.yml
          parameters:
            displayName: 'CreateSASToken'
            azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
            inlineScript: |
              $token= az storage container generate-sas --account-name ${{ variables.linkedServiceStorageAccountName }} --name ${{ variables.linkedServiceStorageAccountContainerName }} --permissions r --expiry $(sasExpirationDate) --output tsv
              echo "##vso[task.setvariable variable=sasToken;issecret=true;isoutput=true;]?$token"
        - template: ../tasks/azcli_inline_script_task.yml
          parameters:
            displayName: 'Copy Linked Templates to Azure Storage'
            azureSubscriptionName: ${{ variables.azureServiceConnectionName }}
            inlineScript: az storage blob upload-batch --account-name ${{ variables.linkedServiceStorageAccountName }} --destination ${{ variables.linkedServiceStorageAccountContainerName }} --source ${{ parameters.workingDirectory }}${{ parameters.artifactName }}

This is the entire job listed out. The source code is available in my TheYAMLPipelineOne repository.

Conclusion

Managing and deploying linked ARM templates isn’t easy. However, if we can template out these steps, we can automate the deployment process, making it easier for developers and admins alike. Hopefully you are now a bit more confident in deploying linked ARM templates via YAML Pipelines. If you want to learn more about YAML templates, check out the resources on my personal blog and/or my contributions on the topic on the Microsoft Health and Life Sciences Blog.