Azure Cost Analysis Part 1: Getting the Data

Overview

This is the first part of a series in post whose intention is to export Azure Cost Data, transform it using Azure Data Factory, and finally overlay Power BI for analytic reporting. Realistically once the data is in the Data Lake you can pick any type of data reporting or integration tool. This would potentially include big data solutions like HDInsight and Databricks.

Getting the Data

We are pretty much going to follow the steps in Microsoft Documentation. At a high level this going to set up an extract to export the data to an Azure Storage account at a given interval. I highly recommend just sticking with last month’s export of the bill. The reason for this is it’s easier to begin with data that is static. Some of the daily or most recent cost data from Microsoft can shift as they collect data from all the resource types spread across all the regions. If you are more comfortable and familiar with getting daily extracts feel free to set that up as potentially an additional feed into your data source.

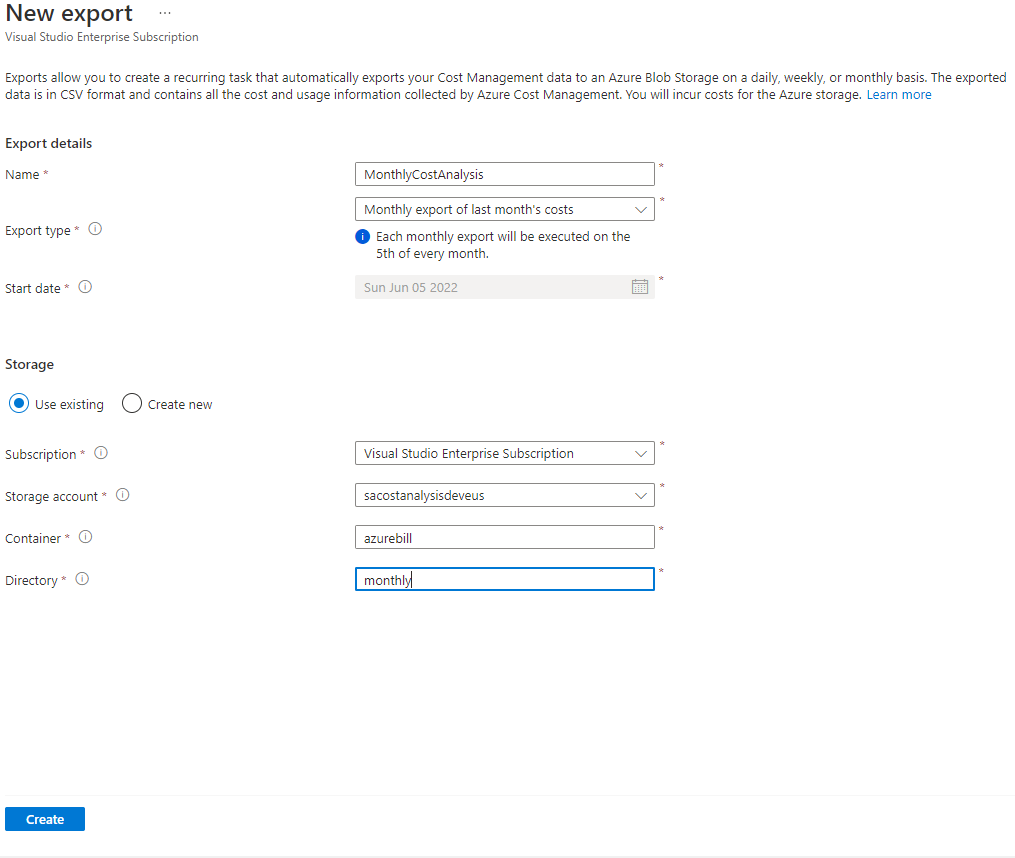

To get started navigate to Cost Analysis-Export in the portal. You will get a screen similar to the one below. I have already created a storage account sacostanalysisdeveus

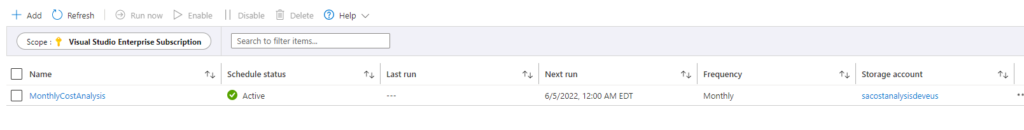

If successful you will see something like:

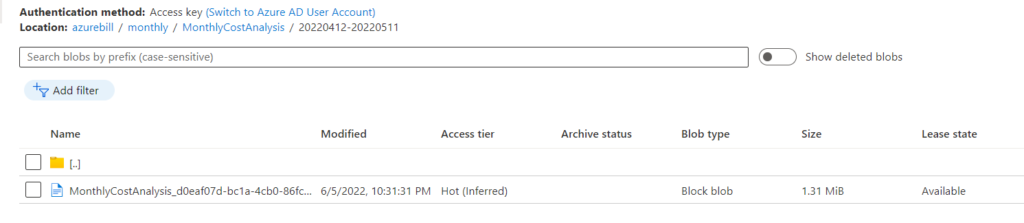

If following these steps the file itself will be placed in the storage account like below:

This can be downloaded as a .csv for further evaluation. The one thing I always tell customers is the fact the Azure tags will replicate all the way down to the bill. This is something that can be helpful when trying to structure and analyze Azure costs appropriately.

Conclusion

This will get you an automated export of your costing data. However; those that deal with data can quickly identity some of the limitations here.

First .csv’s aren’t the easier or most performant when trying to combine and analyze data. Secondly Blob storage can be a great resting place for static data; however, it is not the most secure or optimize for data analytics or data warehousing. The next couple articles will step through one way, leveraging Data Factory, to take the .csv, convert them to .parquet files for further processing. After that there will be another article covering how to take the .parquet files and do some light way transformation.

Discover more from John Folberth

Subscribe to get the latest posts sent to your email.